Automating deployment using a CI/CD tool

Published 12 September 2025

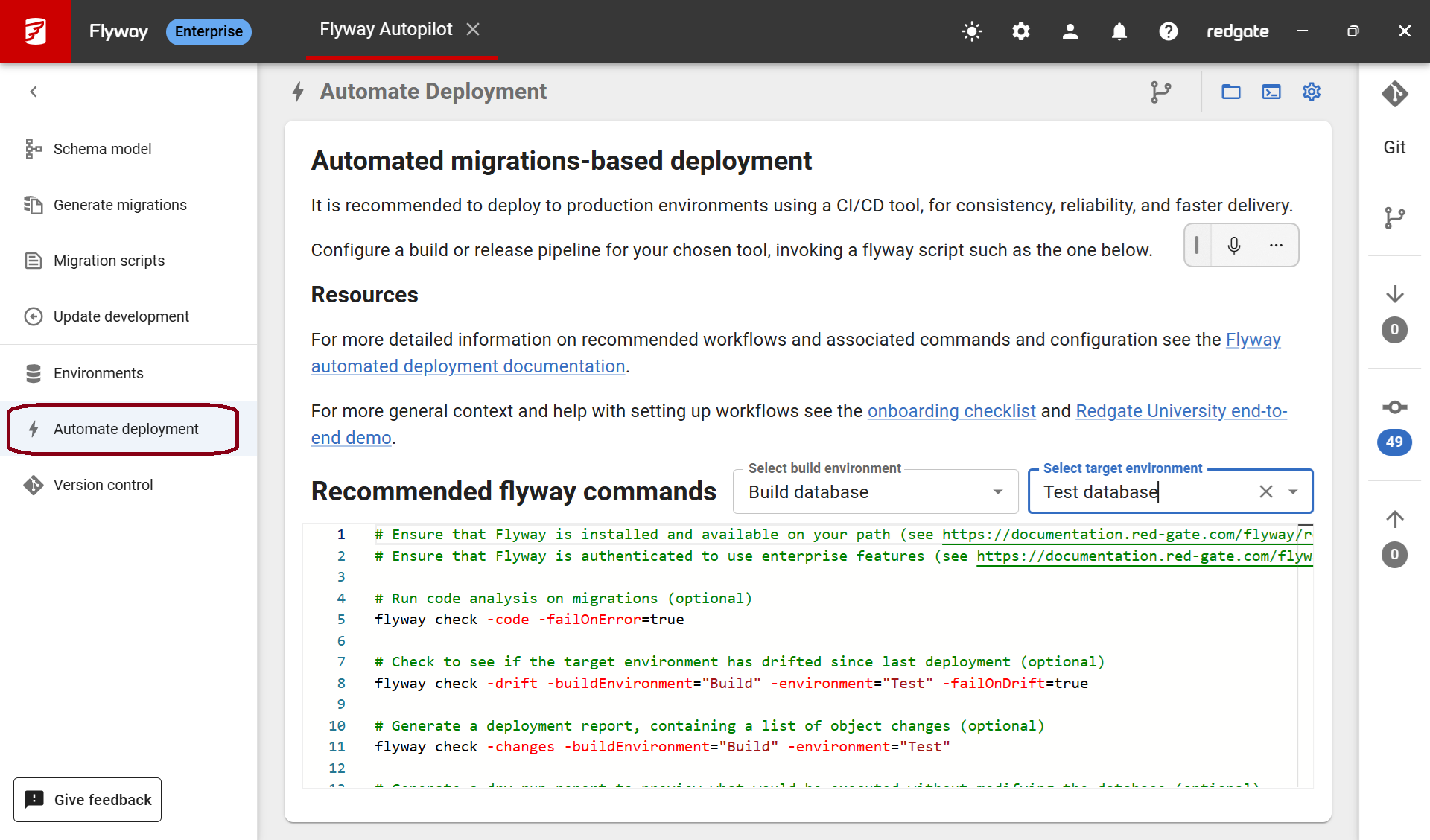

It is recommended to deploy to environments using a CI/CD tool, for consistency, reliability, and faster delivery.

In order to get this set up, you need to work out what Flyway commands you want to run for your workflow and build them into a small script which is configured to run using your chosen CI/CD tool's job/task configuration. So for example, you might just choose to run the flyway migrate command and execute that as bash script within GitHub actions yaml configuration.

Flyway Desktop can help you build the bash script component by generating default scripts which match your project type on the Automated deploy page.

For more help with setting up workflows see the onboarding checklist and Redgate University end-to-end demo. There are also links to example pipelines below,

Recommended build/deployment steps

- Check out scripts from version control

- CI - Build, analyze, and test database changes

- Build - Execute your scripts on a build environment. The build environment could be built from scratch or an existing build environment could be updated. This validates your scripts by making sure they can run successfully.

- Analyze - Code analysis checks - Make sure you're not using deprecated syntax, changing security settings, or dropping data. You can also enforce naming conventions are being followed. Built-in Redgate checks are available with Flyway Enterprise.

- Test - Testing can be unit tests, integration tests, regression tests, performance tests, etc. Having a few smoke tests that run on every build and give quick feedback to ensure the most critical parts of your system are not broken is a good idea.

- Deploy to test environments (optional)

- You may have additional test environments or Quality Assurance (QA)/User Acceptance Testing (UAT) environments that need to be deployed to. Using an automated process helps ensure the consistency of your environments.

- Deploy to a Staging/Pre-Production environment (optional)

- A Staging/Pre-Production environment is as similar to your Production environment as possible. It may even have a copy of Production data, so that the deployment can be tested on as realistic an environment as possible before going to Production.

- Staging/Pre-Production environments can also be used to check how long a deployment will take to run. This information can be used to schedule deployments for off hours or down time as needed.

- Manual intervention before going to Production (optional)

- Review the Change Report to quickly see how many objects will be changed. Drill into a side-by-side difference of each object to understand the changes easily and determine if there's any impact. - Flyway Enterprise

- Review the SQL script - This is the exact script that will be executed on the Production Environment. - Flyway Enterprise

- Check Production for drift - Flyway Enterprise

- Check for drift to make sure no objects have changed in Production since your last deployment. This ensures that the Staging/Pre-Production deployment you did really reflects the deployment to Production and that no Production hot fixes will cause issues with the current deployment.

- Deploy to Production

- Log changes

- Log changes (in Redgate Monitor) and/or in Flyway Pipelines.

State-based deployments

EDITION: ENTERPRISE

State based deployments involve:

- Use the prepare command to generate a deployment script between your schema model and target environment.

- Use the deploy command to execute the deployment script against your target environment.

State-based deployments rely upon Redgate comparison technology and are available for SQL Server, Oracle, PostgreSQL, MySQL, and their cloud variants.

Resources

Migrations-based deployments

Migrations based deployments involve:

- Use the prepare command to generate a deployment script between your migrations and target environment.

- Use the deploy command to execute the deployment script against your target environment.

This will wrap all your pending migration scripts into one deployment script and be executed as one transaction where possible.

You can also use migrate command to apply pending migrations to a target environment. By default, this will run each migration script in it's own transaction. This could be changed with the group setting.

If you need to conditionally execute migrations in an automated pipeline, the best approach is to either use multiple locations or deploy rules. Deploy rules allow for the most fine-grained control - see managing migrations for examples of how to configure them.

Note: If you did not use Flyway Desktop and your downstream databases already have objects in them, then you will need to baseline your downstream environments first. In Flyway Desktop, we set baselineOnMigrate=true and baselineVersion=<your_baseline_version_number> in the project settings so this will automatically be done the first time your pipeline runs. Once your downstream environments are baselined, use these Example CI/CD Pipelines to setup your own pipeline for deploying database changes.

Resources

- Common migrations-based deployment scripts

- Example migrations-based CI/CD pipelines

- Autopilot CI/CD walkthrough

Flyway must be available on your CI/CD agents

In order to use Flyway to deploy your database changes as part of an automated CI/CD pipeline, you need to be able to access Flyway on your CI/CD agents. There are a few ways to do this:

- Use Docker (recommended). This way, you can just pull the latest version or a tagged version into your pipeline when it runs. There's nothing to install/maintain.

- Install the Flyway command line by downloading it and unzipping it to a location on the agent (e.g., C:\Flyway). See this tutorial for scripts to do this.

- Commit the unzipped flyway command line files into your version control system. Your CI/CD agents can checkout the repository with the necessary flyway files to execute the flyway commands from there. When upgrading, just commit the latest flyway release into your repository and it will be used in future pipelines.

- Use Chocolatey or another package management system.

Authenticate to use Flyway Enterprise features

Authentication in a pipeline requires non-interactive authentication.

Online non-interactive authentication

Access Token (PAT)

You can create a token in the Redgate portal and use that with a registered email address to authenticate Flyway. You might want to setup a service account associated with the flyway commands being called from CI/CD so that it's not tied to a particular end user.

This can be achieved using the email parameter and the token parameter. These can be set via environment variables.

Using a personal access token will store a license permit in the Redgate app data folder, the same location as when running the Auth command in interactive authentication.

Offline non-interactive authentication

These work without contacting Redgate (e.g. air-gapped pipelines) and so will expire at renewal time.

This will require that you update your pipeline to refresh the key/permit to continue working.

Non-interactive authentication is achieved using license permits or license keys (deprecated).

Connecting to databases in CI/CD pipelines

For connecting to target databases in a CI/CD pipeline, secrets may need to be passed around, including parameters such as username and password.

The exact mechanism will vary depending on the CI/CD tool, Flyway also comes with native support for some secret management stores.

Implementing a rollback strategy

You may want to consider implementing a rollback strategy so there is a known process for handling any failed deployments.

Switching from a state-based to migrations-based deployment

There are a few edge cases that state-based deployments do not handle well. A few are examples:

- Updating transactional data

- Adding a new NOT NULL column without a default value

- Renaming a table

- Splitting a table

- Renaming a column

- Splitting a column

These can be worked around with pre/post deployment scripts, but if you find yourself needing to workaround these a lot, or if you just want to guarantee that this exact script will be executed on your downstream environments, then moving to a migrations-based approach is an option.

It is possible to convert from a state-based pipeline to a migrations-based pipeline since the prepare and deploy commands can be used for both state and migrations. See this tutorial.